Introduction

Having recognized the potential of the stock volatility decomposition method introduced by Brogaard et al. (2022, RFS) in my previous blog, I will show how to implement this method to empower your own research in this blog.

For readers with time constraints, the codes for implementing this variance decomposition method can be approched via this link.

As replicating Brogaard et al. (2022, RFS) requires some manipulations on the VAR estimation outputs, I took some time to figure out the theory and estimation of the reduced-form VAR coefficients, Impulse response functions (IRFs), structural IRFS, orthogonalized IRFs, and variance decomposition and summarized what I’ve got in a three-blog series about VAR.

In the first blog, I show the basic logics of VAR model with the simplest 2-variable, 1-lag VAR model. In the second blog, I show how to use var and svar commands to conveniently estimate the VAR model in Stata. In the third blog, I dig deeper, show the theoretical definitions and calculation formula of major outputs in VAR model, and manually calculate them in Stata to thoroughly uncover the black box of the VAR estimation.

For this blog, I will only focus on the paper-specific ideas. Readers who need more background information about VAR estimation can find clues in my three-blog series about VAR.

Data and Sample

The sample used by Brogaard et al. (2022, RFS) consists of all common stocks listed on the NYSE, AMEX, and NASDAQ spanning from 1960 to 2015. Estimation of the VAR model requires daily data on stock returns, market returns, and dollar-signed stock trading volumes.

The reduced-form VAR model below is estimated in stock-year level. $$ \begin{aligned} &r_{m, t}=a_0^*+\sum_{l=1}^5 a_{1, l}^* r_{m, t-l}+\sum_{l=1}^5 a_{2, l}^* x_{t-l}+\sum_{l=1}^5 a_{3, l}^* r_{t-l}+e_{r_m, t} \\\ &x_t=b_0^*+\sum_{l=1}^5 b_{1, l}^* r_{m, t-l}+\sum_{l=1}^5 b_{2, l}^* x_{t-l}+\sum_{l=1}^5 b_{3, l}^* r_{t-l}+e_{x, t} \\\ &r_t=c_0^*+\sum_{l=1}^5 c_{1, l}^* r_{m, t-l}+\sum_{l=1}^5 c_{2, l}^* x_{t-l}+\sum_{l=1}^5 c_{3, l}^* r_{t-l}+e_{r, t} \end{aligned} \tag{1} $$ where

- \(r_{m,t}\) is the market return, the corresponding innovation \(\varepsilon_{r_{m,t}}\) represents innovations in market-wide information

- \(x_t\) is the signed dollar volume of trading in the given stock, the corresponding innovation \(\varepsilon_{x,t}\) represents innovations in firm-specific private information

- \(r_t\) is the stock return, the corresponding innovation \(\varepsilon_{r,t}\) represents innovations in firm-specific public information

- the authors assume that \(\{\varepsilon_{r_m, t}, \varepsilon_{x, t}, \varepsilon_{r, t}\}\) are contemporaneously uncorrelated

Download Data

To better serve my research purpose, in this blog I will implement the stock-year level variance decomposition for all common stocks listed on the NYSE, AMEX, and NASDAQ spanning from 2005 to 2021.

The SAS code for downloading the data is as follows. I first log in the WRDS server in SAS. Then I download the daily stock price, trading volume, return, and market return for all common stocks listed on the NYSE, AMEX, and NASDAQ - that’s exactly what the CRSP got. For ease of importing into Stata, I transfer all the downloaded sas dataset into csv format. As the daily CRSP data is too huge, I implement all the above procedures year by year.

|

|

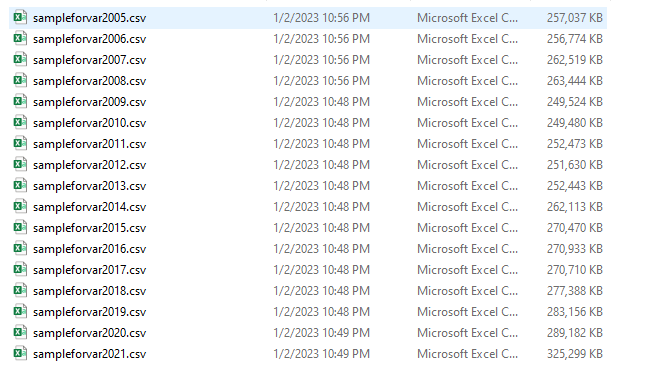

The output of this step is as follows. The raw data for each year is stored in csv file named as sampleforvar + year.

Clean Data

We have two tasks in this step.

- generate the 3 variables

rm,x, andrfor VAR estimation - set time series, which is the prerequisite for using the

svarcommand

Following Brogaard et al. (2022, RFS), the 3 variables for VAR estimation is constructed as follows.

- I use Equal-Weighted Return (

ewretdin CRSP) in basis points as market returnrm - I use the daily Holding Period Return (

retin CRSP) in basis points as stock returnr - I use the daily signed dollar volume in $ thousands as stock order flow

x- The daily signed dollar volume is defined as the product of daily stock price (

prcin CRSP), trading volume (volin CRSP), and the sign of the stocks’ daily return

- The daily signed dollar volume is defined as the product of daily stock price (

To mitigate the impacts of outliers, I winsorized all the above variables at the 5% and 95% levels.

I set the index of trading days within a given stock-year to tag the time series.

The Stata codes are as follows. Note that I use the cusip and year as identifiers. For the convenience of looping over stocks, I generate a unique number cusipcode for each stock.

The output in this step is the yearly dataset named as sampledata +year that is ready for the implementation of the VAR estimation in stock-year level.

|

|

Extract Estimation Unit and Set Global Variables

As the VAR estimation is implemented in stock-year level, we need firstly extract the sample for each stock and year with the identifiers cusip and year. All the subsequent manipulations are functioning in the single stock-year dataset as follows.

|

|

In this step, I also set two global variables that will be repeatedly used in the subsequent procedures.

- the variable names in the VAR system

names - the number of observations in the dataset unit

rownum

The codes for this step are as follows.

Implement Brogaard Decomposition

For now, we’ve collected all the necessary variables, and get the data ready for Brogaard decomposition. Before we actually start to estimate, I would like to provide a big picture for implementing the Brogaard decomposition in a single stock-year dataset.

The tasks we’re going to resolve are as follows.

-

Estimate the reduced-form VAR in Equation (1), saving the residuals \(e\) and variance/covariance matrix of residuals \(\Sigma_e\)

-

Estimate matrix \(B\), which specifies the contemporaneous effects among variables in the VAR system

-

Estimate the structural shocks \(\epsilon_t\) and their variance-covariance matrix \(\Sigma_\epsilon\)

-

Estimate the 15-step cumulative structural IRFs \(\theta_{rm}\), \(\theta_x\), \(\theta_r\), which represent the (permanent) cumulative return responses of stock return \(r\) to unit structural shocks \(\varepsilon_{r_m, t}, \varepsilon_{x, t}, \varepsilon_{r, t}\) respectively

-

Combine the estimated variances of the structural innovations from step 3 with the long-run responses from step 4 to get the variance components of each information source using the following formula. $$ \begin{aligned} \text { MktInfo } &=\theta_{r_m}^2 \sigma_{\varepsilon_{r_m}}^2 \\\ \text { PrivateInfo } &=\theta_x^2 \sigma_{\varepsilon_x}^2 \\\ \text { PublicInfo } &=\theta_r^2 \sigma_{\varepsilon_r}^2. \end{aligned} $$

-

Estimate the contemporaneous noise term with the following Equation $$ \Delta s = r_t-a_0-\theta_{rm}\epsilon_{rm,t}-\theta_x\epsilon_{x,t}-\theta_r\epsilon_{r,t} $$ As we’re only interested in the variance of \(\Delta s\), which is by construct the variance from noise, we can ignore the constant term \(a_0\) and use the variance of \(\Delta s^*\) to represent the noise variance instead, where $$ Noise = \sigma_s^2=Var(\Delta s)=Var(\Delta s^*)\\\ \Delta s^*=r_t-\theta_{rm}\epsilon_{rm,t}-\theta_x\epsilon_{x,t}-\theta_r\epsilon_{r,t} $$

-

Get variance shares by normalizing these variance components $$ \begin{aligned} \text { MktInfoShare } &=\theta_{r_m}^2 \sigma_{\varepsilon_{r_m}}^2 /(\sigma_w^2+\sigma_s^2 )\\\ \text { PrivateInfoShare } &=\theta_{x}^2 \sigma_{\varepsilon_x}^2 /(\sigma_w^2+\sigma_s^2 ) \\\ \text { PublicInfoShare } &=\theta_r^2 \sigma_{\varepsilon_r}^2 /(\sigma_w^2+\sigma_s^2 ) \\\ \text { NoiseShare } &=\sigma_s^2 /(\sigma_w^2+\sigma_s^2 ) . \end{aligned} \notag $$

where \(\sigma_w^2\) represents the sum of all information-based components in stock return volatility. $$ \sigma_w^2 =\theta_{r_m}^2 \sigma_{\varepsilon_{r_m}}^2 +\theta_{x}^2 \sigma_{\varepsilon_{x}}^2 +\theta_{r}^2 \sigma_{\varepsilon_{r}}^2 $$

Step 1: Estimate VAR Coefficients, Matrix \(B\), \(\epsilon_t\), \(\Sigma_e\), and \(\Sigma_\epsilon\)

I set the lag order as 5 to keep consistent with Broggard et al. (2022, RFS) and then I use svar model to estimate the VAR model, imposing a Cholesky type restriction to contemporaneous matrix \(B\) as mentioned in the paper.

The readers can see details about the matrix \(B\) in Dig into Estimation of VAR Coefficients, IRFs, and Variance Decomposition in Stata and see why svar command can directly estimate it in Estimations of VAR, IRFs, and Variance Decomposition in Stata.

The codes for estimating the reduced-form model are as follows.

The svar command stores the matrix \(B\) as e(A), the coefficient matrix as e(b_var) and the variance/covariance matrix of residuals \(\Sigma_e\) as e(Sigma). So I didn’t estimate them once more but just take them directly from the results of svar estimation.

I adjust the freedom of variance/covariance matrix of residuals generated from svar command sigma_hat from \(n-p\) to \(n-p-1\) and name the adjusted variance/covariance matrix as sigma_e, where \(n\) is the total number of the observations in the dataset and \(p\) is the number of lag orders, which is set as 5 following the paper. It shouldn’t make much difference if the readers ignore this step. Note that as preidcting residuals needs \(p\)-lag variables, the residuals \(e\) by definition lose freedom of \(p\).

By definition,

$$

\epsilon_t=Be_t

$$

That also implies

$$

\Sigma_\epsilon=B\Sigma_eB’

$$

With above formulas, we can calculate the structural shocks \(\epsilon_t\) and their variance/covariance matrix \(\Sigma_\epsilon\) as follows. I stored the structural shocks in a matrix named epsilons and the variance/covariance matrix of structural shocks in a matrix named sigma_epsilon.

|

|

Step 2: Estimate 15-step cumulative structural IRFs \(\theta_{rm}\), \(\theta_x\), and \(\theta_r\)

While the svar command can produce the results for IRFs, Orthogonalized IRFs, and Orthogonalized Structural IRFs automatically, what we need are the un-orthogonalized Structural IRFs.

Procedures for estimating \(\theta\)

The \(\theta\)s, which are the 15-step cumulative un-orthogonalized Structural IRFs, can be quickly and conveniently calculated via the following procedures (please see more details in Dig into Estimation of VAR Coefficients, IRFs, and Variance Decomposition in Stata).

- calculate the IRFs \(\Phi_i(i=1,2,3…,15)\) following the following formula, where \(k\) is the number of variables in the VAR system. \(A_j\) is the \(j\)-lag coefficient matrix for the reduced-form VAR

$$ \Phi_0 = I_k\\\ \Phi_i = \Sigma_{j=1}^{i}\Phi_{i-j}A_j \tag{2} $$

-

post-multiply IRF \(\Phi_i\) with \(B^{-1}\) to get (un-orthogonalized) structural IRFs \(\Lambda_i\) for each forward-looking step \(i=1,2,3,..,15\) $$ \Lambda_i =\Phi_iB^{-1} \tag{3} $$

-

Sum all the 15-step (un-orthogonalized) structural IRFs \(\Lambda_i\) to obtain the cumulative structural IRFs.

-

As we are only interested in the 15-step cumulative structural IRFs functioning on the stock returns, which are specified in the third equation in the VAR system, the \(\theta_{rm}\), \(\theta_x\), and \(\theta_r\) lie on the third row of the 15-step cumulative structural IRF matrix.

Obtain coefficient matrix \(A_j(j=1,2,…,5)\)

To implement the above procedures, the first thing we need to get are the reduced-form coefficient matrix \(A_j(j=1,2,…,5)\). As we have obtained all the reduce-form coefficients with svar command and stored them in a matrix coef in the last step, we don’t have to compute them again but just need to reshape the matrix coef into the shape we need.

The coefficient matrix we currently have coef is a \(1\times 48\) matrix. I first reshape it to a \(3\times 16\) matrix named newcoef, where each row contains the coefficients for one equation in the VAR system. Within each row, the coefficients are ordered with fixed rules: the coefficients for the first variable rm with 1 to 5 lags, the coefficients for the second variable x with 1 to 5 lags, the coefficients for the third variable x with 1 to 5 lags, and the constant for the corresponding equation. That implies, the coefficients for the same lag can always be found every 5 columns.

With the above observations, I generated matrix \(A_1\) to \(A_5\) with the following codes.

|

|

I list the 3-lag coefficient matrix \(A_3\) as an example to show the desired format of coefficient matrix \(A_1\) to \(A_5\). For the \(ij\)-th element of the matrix \(A_j\), it represents the impact of one-unit reduced-form shock \(e_{jt}\) on the Equation with variable \(i\) as dependent variable.

Calculate IRFs and cumulative un-orthogonalized Structural IRFs

To this stage, we’ve made it clear about the formulas of calculating the IRFs and un-orthogonalized Structural IRFs (please see Equation (2) and (3)) and obtained all the necessary ingredients (coefficient matrix \(A_i\) and matrix \(B\)) for the calculations.

The codes for calculating IRFs and cumulative un-orthogonalized Structural IRFs are as follows. I summed up all the un-orthogonalized Structural IRF matrix step by step to get the 15-step cumulative un-orthogonalized Structural IRFs and name it as csirf.

|

|

Extract \(\theta\)

The 15-step cumulative un-orthogonalized Structural IRF matrix csirf is as follows. The \(ij\)-th element of this matrix represents the permanent (cumulative) impact of one-unit structural shock \(\epsilon_{j,t}\) on the \(i\)-th Equation in the VAR system.

By definition, the elements in the 3rd row of the matrix csirf are \(\theta_{rm}\), \(\theta_x\), and \(\theta_r\) respectively.

Thus, we can extract thetas from the matrix csirf and save the thetas into a new matrix named theta.

Step 3: Calculate noise term

As we’ve discussed in the road map, the noise variance is given by the following formula. $$ Noise = \sigma_s^2=Var(\Delta s)=Var(\Delta s^*)\\\ \Delta s^*=r_t-\theta_{rm}\epsilon_{rm,t}-\theta_x\epsilon_{x,t}-\theta_r\epsilon_{r,t} $$ Intuitively, we need to first calculate \(\Delta s^*\) by substracting the combinations of structural shocks \(\epsilon_t\) and the permanent impact of structural shocks on stock returns \(\theta\) from the contemporaneous stock return \(r_t\).

As we’ve saved the structural shocks \(\epsilon_t\) in a matrix named epsilons and the permanent impact of structural shocks on stock returns \(\theta\) in a matrix named theta, the contemporaneous noise term \(\Delta s^*\) can be calculated with the following codes, where I save the noise term into a matrix named delta_s.

To more conveniently produce the variance of the noise term, I saved the noise term matrix delta_s into a new column named delta_s in the dataset.

Step 4: Calculate the variance from each component

Till now, we’ve collected all the ingredients needed to compute the variance contribution of all the four components defined by the Brogaard et al. (2022, RFS).

Firstly, we calculate the variance contribution from three-types of information with the following formula.

$$

\begin{aligned} \text { MktInfo } &=\theta_{r_m}^2 \sigma_{\varepsilon_{r_m}}^2 \\\ \text { PrivateInfo } &=\theta_x^2 \sigma_{\varepsilon_x}^2 \\\ \text { PublicInfo } &=\theta_r^2 \sigma_{\varepsilon_r}^2. \end{aligned}

$$

As we’ve saved the thetas in a matrix theta and the variance/covariance matrix of structural shocks \(\epsilon_t\) into a matrix sigma_epsilon, we can calculate the information-based variances as follows.

|

|

Note that I put all the variance components along with the related parameters \(\theta\) and \(\sigma_\epsilon\) into a new matrix named brogaard. This matrix looks like as follows.

Secondly, I calculate the variance contribution from noise, which is proxied by the variance of \(\Delta_s^*\) we’ve calculated above. Of course, I add the noise variance into the result matrix brogaard.

After this step, we’ve figured out the variance contribution from each component defined by the Brogaard paper and saved them into the result matrix brogaard.

The final result matrix brogaard is as follows.

Step 5: Calculate variance contribution

To more conveniently calculate the variance contribution, I saved the result matrix brogaard into the dataset. I follow the following formula to calculate the variance contribution of each component and save the percentages into a new variable named varpct.

$$

\begin{aligned}

\text { MktInfoShare } &=\theta_{r_m}^2 \sigma_{\varepsilon_{r_m}}^2 /(\sigma_w^2+\sigma_s^2 )\\\

\text { PrivateInfoShare } &=\theta_{x}^2 \sigma_{\varepsilon_x}^2 /(\sigma_w^2+\sigma_s^2 ) \\\

\text { PublicInfoShare } &=\theta_r^2 \sigma_{\varepsilon_r}^2 /(\sigma_w^2+\sigma_s^2 ) \\\

\text { NoiseShare } &=\sigma_s^2 /(\sigma_w^2+\sigma_s^2 ) .

\end{aligned} \notag

$$

where \(\sigma_w^2\) represents the sum of all information-based components in stock return volatility.

$$

\sigma_w^2 =\theta_{r_m}^2 \sigma_{\varepsilon_{r_m}}^2 +\theta_{x}^2 \sigma_{\varepsilon_{x}}^2 +\theta_{r}^2 \sigma_{\varepsilon_{r}}^2

$$

The saved dataset is as follows.

|

|

Pack codes

Remember the Broggard decomposition is implemented in stock-year level. That means we need to loop over the above codes over the daily observations of each stock in each year. That requires an efficient packing of the codes.

There are two issues worth noted in the packing procedures.

- I require there are at least 50 observations for the estimation of the VAR model

- otherwise, the VAR estimation doesn’t converge or lacks vaidility with too few freedoms

- I require the estimation of VAR model converges

- otherwise, it’s not possible to get converged residuals, which are the prerequisite for the subsequent calculations

|

|

Loop over sample

I run the packed code over stocks in each year and collected all the results for different years together. Then I reshaped the dataset into panel data. The codes are as follows.

|

|

The final outcome is as follows.

|

|

Conclusion

In this blog, I replicated the stock volatility decomposition method introduced by Brogaard et al. (2022, RFS). Given the potential of this information-based decomposition method as I’ve discussed in Theory for the information-based decomposition of stock price, I hope this blog can help the readers make use of this method to empower their own research.