Contents

Motivation

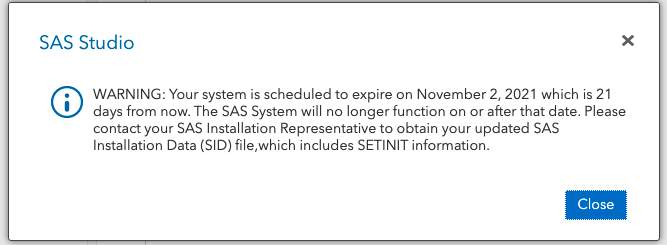

The WRDS-SAS Studio seems to be suspended soon. As a big fan of this cloud platform, I have to find something alternative to make my data extraction from WRDS replicable. Compiled from a series of WRDS guidances (you can find them in the Reference part), this blog will introduce how to exploit WRDS cloud via Python.

The biggest advantage of WRDS Cloud is that you don’t have to download everything to your computer but just deal with the massive data using the computing source of WRDS Cloud. Following this blog, one can quickly establish a python-wrds cloud workflow without checking various manuals.

Connect to WRDS using SSH

Mac OS

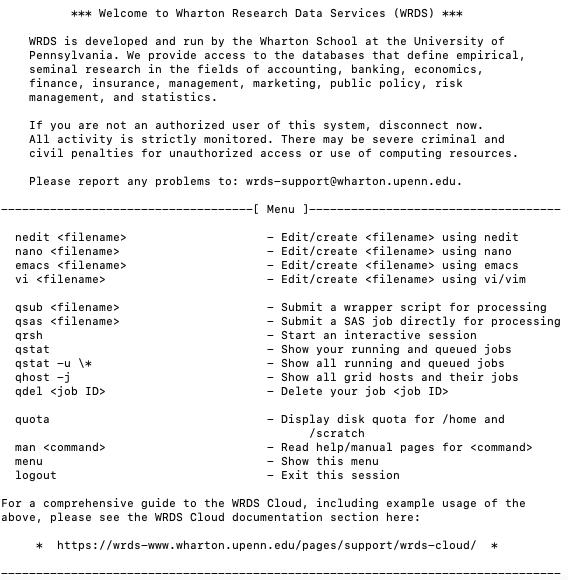

MacOS computers come with a utility called Terminal that has SSH functionality built-in that can be used to connect to the WRDS Cloud. You can initiate an SSH connection to the WRDS Cloud as follows:

- Type

ssh wrdsusername@wrds-cloud.wharton.upenn.eduwhere wrdsusername is your WRDS username: the same username you use to log onto the WRDS website. - When prompted, enter your WRDS password.

- Once you have connected to the WRDS Cloud, you will be given a prompt – which indicates that the server is ready for your commands.

- You can disconnect from the WRDS Cloud at anytime by typing

logoutor using the key combination CTL-D.

Windows

If you are on Windows, you will need to download and install SSH client software (e.g., PuTTY, WinSCP) to your computer.

Once you have downloaded and installed one of the above, you can initiate an SSH connection to the WRDS Cloud. Suppose you use PuTTY and want to configure PuTTY for SSH:

- In Host Name, enter

wrds-cloud.wharton.upenn.edu - In Port (skip if there is no Port field), enter

22 - When prompted, enter your WRDS username.

- When prompted, enter your WRDS password.

Open IPython in the Cloud

Interactive Python jobs allow you to run code in serial, and receive a response to each command as you enter it. To run interactive Python jobs, you will need to schedule an interactive job with the WRDS Cloud Grid Engine by entering qrsh and then enter ipython3 to enter an interactive python enviornment, which is exactly what you get in Jupyter. Don’t forget to insert pip install wrds before you connect to the WRDS library list.

|

|

Initiating a WRDS connection in Python

First, as with every Python program that intends to connect to WRDS, you must import the wrds module. Then you can make the connection and set up a pgpass file.

Explore WRDS Libraries and Tables

You can analyze the structure of the data through its metadata using the wrds module, as outlined in the following steps:

-

List all available libraries at WRDS using

list_libraries() -

Select a library to work with, and list all available datasets within that library using

list_tables() -

Select a dataset, and list all available variables (column headers) within that dataset using

describe_table()

|

|

Submit Queries

Now that you know how to query the metadata and understand the structure of the data, you are ready to query WRDS data directly. The wrds module provides several methods that are useful in gathering data:

get_table()- fetches data by matching library and dataset, with the ability to filter using different parameters. This is the easiest method of accessing data.raw_sql()- executes a SQL query against the specified library and dataset, allowing for highly-granular data queries.get_row_count()- returns the number of rows in a given dataset.

For parameters, and further explanation of each, use the built-in help: e.g., help(db.get_table).

Here are some examples.

|

|

Transfer Data through Dropbox

Files in your WRDS Cloud

Insert ls in your ssh command line, you will find the files you’ve saved in your Wrds Cloud. Seems that you have got the files you desire but the files are stored in WRDS Cloud. The next step is to determine how to exchange files between your personal computer and the WRDS Cloud.

After testing several alternatives (e.g., adopt an FTP server Cyberduck), I personally found linking WRDS with Dropbox is the most efficient way to do this job. Transferring files using SCP is also a good idea but it doesn’t automatically backup your workflows as Dropbox does.

Data Storage Locations

Having a big picture about how WRDS Cloud stores our files can make our file transferring job more intuitive. As the WRDS Cloud is a shared resource, with many hundreds of users accessing at any one time, this storage space is managed by a quota. Typically, there are two separate quota for your, depending on the storage locations and how long the WRDS Cloud keeps the data for you. Type quota in your command line, you will find how much storage you’ve used.

|

|

Permanent Storage - Home Directory

All users are given 10 GB dedicated permanent storage in their home directory in the WRDS Cloud.

Your home directory is located at:

/home/[group name]/[username]

- You may store up to 10GB of data in this directory.

- Files in this directory are never deleted by WRDS.

- Files in this directory are backed up via snapshots created on a regular basis (see the File Recovery section, below)

Note: To determine your group name, use the id command on the WRDS Cloud: the name in the second set or parenthesis is your group name. From my case below, it’s easy to find my group name in WRDS is frankfurt

Temporary Storage - Scratch Directory

All subscribing institutions are given 500 GB shared temporary storage in the scratch directory. Generally, users should direct your larger output result data to this directory for staging before downloading, or before loading into subsequent programs. The scratch directory is located at:

/scratch/[group name]

- Members of your institution may store up to 500GB of data in this directory, shared between all members.

- Files in your shared scratch directory are deleted after one week (168 hours).

- Files in this directory are not backed up anywhere, and should be downloaded shortly after being generated by your programs.

Link WRDS with Dropbox

WRDS provides the dbxcli command on the WRDS cloud login nodes to transfer data directly between WRDS and your dropbox shares. dbxcli is a simple, ftp like transfer client; it only transfers files between dropbox and clients.

To do this, you need:

-

Login to the WRDS cloud via ssh

- If you are in the IPython enviornment, you need to insert

quitto quit IPython, and thenlogoutto exit the Interactive Jobs

- If you are in the IPython enviornment, you need to insert

-

Run the

dbxcli accountcommand.1 2 3 4 5 6 7 8 9 10 11 12 13[xumj2019@wrds-cloud-login2-w ~]$ dbxcli account 1. Go to https://www.dropbox.com/1/oauth2/authorize?client_id=07o23gulcj8qi69&response_type=code&state=state 2. Click "Allow" (you might have to log in first). 3. Copy the authorization code. Enter the authorization code here: NMdc-gHdd7AAAAAAAAAEL3CRL_GpJce0RQ Logged in as xx xx <xx@xx.com> Account Id: dbid:AAB4yqaueMTEfU4-8 Account Type: pro Locale: en Referral Link: https://www.dropbox.com/referrals/AABnCSjxOA?src=app9-934508 Profile Photo Url: https://dl-web.dropbox.com/account_photo/get/dbaphid%3AAAAgMQf5azIHq65no?size=128x128&vers=1561290642421 Paired Account: false -

Follow the instructions the command gives and paste in the authorization code.

Figure 3: Connect WRDS Cloud to Dropbox -

If you successfully logged in the command should print your dropbox account information. You may run

dbxcli accountagain to confirm if your account is connected, if it prints your account information it is connected.

Once you’ve successfully link the wrds with your Dropbpox account, you could create a new folder in the Dropbox, say testdir1013

|

|

At the same time, you could also create a directory in your institution’s scratch volume with a unique name. I would use xmj

|

|

Transfer Files From WRDS to Dropbox

Files can be transferred to Dropbox with the put subcommand.

-

Make a directory in your institution’s scratch volume with a unique name, such as your username. (I’ve introduced this part in Data Storage Locations section)

-

Run your job and save your output to this directory. For example, I save a csv file

test1013.csvand a graph filetestgraph1013.pnginto the scratch direcory I’ve created before /scratch/frankfurt/xmj/1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21In [7]: import matplotlib.pyplot as plt In [8]: data = db.raw_sql("select date,dji from djones.djdaily", date_cols=['dat ...: e'], index_col=['date']) In [9]: plt.plot(data) Out[9]: [<matplotlib.lines.Line2D at 0x2ba61364ad90>] In [10]: plt.xlabel('date') Out[10]: Text(0.5, 0, 'date') In [11]: plt.ylabel('dji') Out[11]: Text(0, 0.5, 'dji') In [12]: plt.show() In [13]: plt.show() In [14]: plt.savefig('/scratch/frankfurt/xmj/testgraph1013.png') In [15]: data.to_csv("/scratch/frankfurt/xmj/test1013.csv") -

When you are done go to the login node and

cdto your scratch directory. -

Once here transfer your file(s) from WRDS to Dropbox with

dbxcli put.Then you will find the files show up in your Dropbox

Figure 4: Transfer Files From WRDS to Dropbox

Transfer Files From Dropbox to WRDS

Files can be transferred to WRDS Cloud with the get subcommand.

-

cdto your scratch directory or home directory, depending on whether you want the files to be stored permanently or temporarily in the Cloud.1[xumj2019@wrds-cloud-login2-w xmj]$ cd /scratch/frankfurt/xmj/ -

Once here transfer your file(s) to WRDS with

dbxcli get. With the following code, I can transfer thetest.csvlocated in my Dropbox root folder to the scratch volume. Typelsin the WRDS command line, and one will find thetest.csvhave been successfully listed in my scatch directory.

Batch Jobs on the WRDS Cloud

The WRDS Cloud supports two job types:

- Interactive jobs are commonly used to practice with a programming language, submit simple, smaller queries, or to test expected outcomes before writing a larger program.

- Batch jobs are generally long-running research programs that assemble larger data samples and perform more CPU-intensive data manipulation.

I’ve introduced the command-line Interactive jobs in the previous sections. In this section, one can learn how to submit batch jobs via an example borrowed from Gen Li’s blog.

Gen Li provides a series of SAS codes to extract TAQ data from WRDS cloud for a list of companies on particular dates during specific time periods. One can download the SAS codes via this link. I will execute WRDS_batch_ticker_ct.sas to show how a batched job works in WRDS cloud.

The input of this batched job is a txt file evt_taqinput.txt which contains two columns, Ticker and Time Stamp. The output of this job is a dta file ticker_ct.dta, which should appear in your WRDS home directory if the batched job is executed successfully.

|

|

To conduct the batch job, one needs to:

-

Upload to your WRDS cloud the txt file that includes ticker and date for which you need TAQ data

(One can learn how to exchange files with WRDS cloud in the section Transfer Data through Dropbox)

I uploaded a txt file named

evt_taqinput. To save the execution time, I just include one line:AAPL 20141205 15:30:00

-

Upload to your WRDS cloud the code file “WRDS_batch.sas”. (Please remember to change to your txt file path in the code). Following the steps mentioned before, I’ve uploaded the SAS code

WRDS_batch_ticker_ct.sas -

Connect to your WRDS Cloud via SSH (See section Connect to WRDS using SSH).

-

Submit command on your terminal:

-

Check the status of your batched job:

1 2 3 4 5[xumj2019@wrds-cloud-login2-w ~]$ qstat job-ID prior name user state submit/start at queue jclass slots ja-task-ID ------------------------------------------------------------------------------------------------------------------------------------------------ 9174218 0.50500 QRLOGIN xumj2019 r 10/13/2021 12:29:39 interactive.q@wrds-sas5-w.whar 1 -

Check the SAS log of the batched job:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183[xumj2019@wrds-cloud-login2-w ~]$ cat check WRDS_batch_ticker_ct.log 1 The SAS System Thursday, October 14, 2021 03:46:00 AM NOTE: Copyright (c) 2016 by SAS Institute Inc., Cary, NC, USA. NOTE: SAS (r) Proprietary Software 9.4 (TS1M7 MBCS3170) Licensed to UNIVERSITY OF PENNSYLVANIA - SFA T&R, Site 70055201. NOTE: This session is executing on the Linux 3.10.0-1160.36.2.el7.x86_64 (LIN X64) platform. NOTE: Analytical products: SAS/STAT 15.2 SAS/ETS 15.2 SAS/OR 15.2 SAS/IML 15.2 SAS/QC 15.2 NOTE: Additional host information: Linux LIN X64 3.10.0-1160.36.2.el7.x86_64 #1 SMP Thu Jul 8 02:53:40 UTC 2021 x86_64 Red Hat Enterprise Linux Server release 7.9 (Maipo) *** Welcome to Wharton Research Data Services (WRDS) *** WRDS is developed and run by the Wharton School at the University of Pennsylvania. We provide access to the databases that define empirical, seminal research in the fields of accounting, banking, economics, finance, insurance, management, marketing, public policy, risk management, and statistics. If you are not an authorized user of this system, disconnect now. All activity is strictly monitored. There may be severe criminal and civil penalties for unauthorized access or use of computing resources. Please report any problems to wrds-support@wharton.upenn.edu. ---------------------------------------------------------------------------- *** Wharton Research Data Services (WRDS) on SSRN *** Submit to the WRDS Research Paper Series (RPS) on SSRN – a searchable repository of all papers submitted to SSRN that cite WRDS in their work. This RPS will elevate researchers’ visibility, increase your Eigenfactor® score, and build a specialized research base for others to explore easily. View Abstracts: http://bit.ly/2qOrt9f Submit to the WRDS RPS: http://bit.ly/2rjrENk ------------------------------------------------------------------------------ *** NEW Learning Resources for Researchers, Instructors, and Librarians *** Curated, guided, self-paced, competency-based access to our data, analytics, research, and technology solutions. In the classroom or at home, for 25+ years Wharton Research Data Services (WRDS) has supported users with targeted solutions that underpin research, reinforce learning, and enable discovery. Visit the WRDS website www.whartonwrds.com to view the full suite of Learning Pathways. -------------------------------------------------------------------------------- 2 The SAS System Thursday, October 14, 2021 03:46:00 AM NOTE: SAS initialization used: real time 0.09 seconds cpu time 0.02 seconds NOTE: AUTOEXEC processing completed. 1 filename input '/home/frankfurt/xumj2019/evt_taqinput.txt'; /* Change to 1 ! your input file path */ 2 3 data dictionary2; 4 infile input dlm = " " dsd missover; 5 input smbl $ dates yymmdd8.; 6 run; NOTE: The infile INPUT is: Filename=/home/frankfurt/xumj2019/evt_taqinput.txt, Owner Name=xumj2019,Group Name=frankfurt, Access Permission=-rw-------, Last Modified=13Oct2021:16:31:41, File Size (bytes)=23 NOTE: 1 record was read from the infile INPUT. The minimum record length was 23. The maximum record length was 23. NOTE: The data set WORK.DICTIONARY2 has 1 observations and 2 variables. NOTE: DATA statement used (Total process time): real time 0.00 seconds cpu time 0.02 seconds 7 8 /* Genrate a macro variable to loop through */ 9 10 proc sql noprint; 11 select distinct dates into :datesValsM separated by ' ' /* select 11 ! unique dates that we want to extract from TAQ Monthly*/ 12 from work.dictionary2; 13 quit; NOTE: PROCEDURE SQL used (Total process time): real time 0.00 seconds cpu time 0.00 seconds 14 15 16 %put &datesValsM; 20062 17 18 /* A macro that autogenerated list of needed Monthly TAQ datasets */ 19 %macro taq_monthly_dataset_list(type = ); 20 %let type=%lowcase(&type); 21 %let i = 1; 22 %let datesValsMi = %scan(&datesValsM, &i); 23 /* Loop over each date in "datesVals" macro variable*/ 24 %do %while("&datesValsMi" ~= "");/** For each date in the 24 ! "datesVals" */ 25 %let yyyymmdd=%sysfunc(putn(&datesValsMi,yymmddn8.)); 26 /*If the corresponding dataset exists, add it to the list 26 ! */ 27 %if %sysfunc(exist(taq.&type._&yyyymmdd)) %then 27 ! taq.&type._&yyyymmdd; 28 %let i = %eval(&i + 1); 29 %let datesValsMi = %scan(&datesValsM, &i); 30 %end; 31 %mend; 32 33 34 * using this macro; 35 36 data outputM; 37 set %taq_monthly_dataset_list(type = ct) open=defer; /* get TAQ 37 ! Monthly data for desired dates */ 38 where (time between '9:30:00't and '10:30:00't) or (time between 38 ! '15:00:00't and '16:00:59't); 39 run; NOTE: There were 13197152 observations read from the data set TAQ.CT_20141205. WHERE ((time>=' 9:30:00'T and time<='10:30:00'T) or (time>='15:00:00'T and time<='16:00:59'T)); NOTE: The data set WORK.OUTPUTM has 13197152 observations and 9 variables. NOTE: DATA statement used (Total process time): real time 2.63 seconds cpu time 2.45 seconds 40 41 proc sql; 42 create table outputx as select a.*, b.* 43 from work.dictionary2 a, outputM b 44 where a.smbl = b.symbol; NOTE: Table WORK.OUTPUTX created, with 94272 rows and 11 columns. 45 quit; NOTE: PROCEDURE SQL used (Total process time): real time 0.33 seconds cpu time 0.33 seconds 46 47 48 /* optional: export sas7bat file to dta file */ 49 proc export data = outputM outfile= 49 ! '/home/vu/frankfurt/xum2019/ticker_ct1014.dta'; run; NOTE: The export data set has 13197152 observations and 9 variables. NOTE: "/home/frankfurt/xum2019/ticker_ct1014.dta" file was successfully created. NOTE: PROCEDURE EXPORT used (Total process time): real time 2.81 seconds cpu time 2.01 seconds 50 NOTE: SAS Institute Inc., SAS Campus Drive, Cary, NC USA 27513-2414 NOTE: The SAS System used: real time 6.63 seconds cpu time 4.99 seconds -

Check the output of your batched job. Enter

ls, you will find the output fileticker_ct.dtahas appeared in your home directory.

References

- PYTHON: On the WRDS Cloud

- Submitting Python Jobs

- Login to the WRDS cloud via ssh

- Interactive Jobs on the WRDS Cloud

- Querying WRDS Data using Python

- Example Python Data Workflow

- Storing Your Data on the WRDS Cloud

- Transfering Data with Dropbox

- Storing Your Data on the WRDS Cloud

- Graphing Data using Python

- Transferring Files using SCP

- Batch Jobs on the WRDS Cloud

- Extract TAQ data from WRDS Cloud via terminal