I. Motivation

High-frequency traders (HFTs) are market participants that are characterized by the high speed (typically in milliseconds level) with which they react to incoming news, the low inventory on their books, and the large number of trades they execute (SEC, 2010). According to Breckenfelder (2019, WP), The high-frequency trading industry grew rapidly since its inception in the mid-2000s and has represented about 50% of trading in US equity markets by 2017 (down from a 2009 peak, when it topped 60%).

In academia, there is a decade-long debate regarding whether HFTs benefit or harm market quality. Some argues that HFTs increase price discovery by systematically correcting the in-attention of human traders and inducing the public information to be incorporated into the stock prices more promptly (e.g., Chordia and Miao, 2020 JAE; Bhattacharya et al., 2020 RAS). Others believe that the predatory behaviour of HFTs, which dampens other market participants’ information acquisition incentives, must have harmed market effieciency (e.g., Ahmed et al., 2020 JAR; Lee and Watts, 2021 TAR).

Either way, there are two things that can be assured.

- HFTs have played a big part in today’s capital market.

- To cope with today’s high-frequency capital market, scholars need to grapple with high-frequency trading data (typically in second-level before 2014 and millisecond level after 2014) to unravel the latest market micro-structure.

In this blogpost, I will introduce how to extract second/millisecond-level trade and quotes data from the WRDS-TAQ database. Such data is typically used for intra-day event studies (e.g., Rogers et al., 2016 RAS; Rogers et al., 2017 JAR). For people who are familar with the WRDS data structure, you can directly access the SAS code via this link .

II. Project Description

Project Purpose

I got time stamps for firm-related events and want to get all the trade and quotes records 15 miniutes before and after the event time. Like any other event studies, the events happened in different days.

Challenges

Due to the huge size of the everyday market order flows, WRDS-TAQ database saves the daily trade and quotes data separately for each day. That means if the events in our sample happened in 2000 unique trading days, we have to query 2000 different trade datasets and another 2000 different quote datasets in the WRDS-TAQ database.

To help the readers get an intuition about the size of the trade and quotes data, I extracted the trade records during two trading hours on December 5th, 2014. It appears that 13,197,152 trades were executed in that just two trading hours, not to mention the even (sometimes much more) bigger quote records.

III. Data Source: WRDS-TAQ database

Daily TAQ (Trade and Quote) provides users with access to all trades and quotes for all issues traded on NYSE, Nasdaq and the regional exchanges for the previous trading day. It’s a comprehensive history of daily activity from NYSE markets and the U.S. Consolidated Tape covering all U.S. Equities instruments (including all CTA and UTP participating markets). One can find more details about the WRDS-TAQ database in WRDS-TAQ User Guide. For those who are familiar with the TAQ database, you can jump via this link.

Markets Covered

The Daily TAQ database covers:

- All CTA Participating Markets – Tapes A, B and C

- NYSE

- Nasdaq (OTC)

- All Regionals Exchanges

Dataset Description

-

Daily TAQ Trades File (Daily @ 9:00 PM EST; History: 24 Apr 2002 – present.) A Daily TAQ Trade file is available as a separate file in WRDS which contains all trades reported to the consolidated tape at the same day. Each trade identifies the time, exchange, security, volume, price, salecondition, the exchange where the order is executed, etc.

-

Daily TAQ Quotes File (Daily @ 2:00 am EST; History: 11 Apr 2002 – present.) A Daily TAQ Quote file is available as a separate file in WRDS which contains all quotes reported to the consolidated tape at the same day. Each quote identifies the time, exchange, security, bid/offer volumes, bid/offer prices, NBBO indicator, etc.

Library and table names in WRDS

-

Until December 2014, the WRDS trade and quotes data is mainly in second-level (the millisecond level data is technically available in WRDS since 2002 but many institutions don’t subscribe the before-2015 millisecond level data). WRDS-TAQ database saves the second-level data with table format like

-

TAQ.CT_20130305 for second-level trade record,

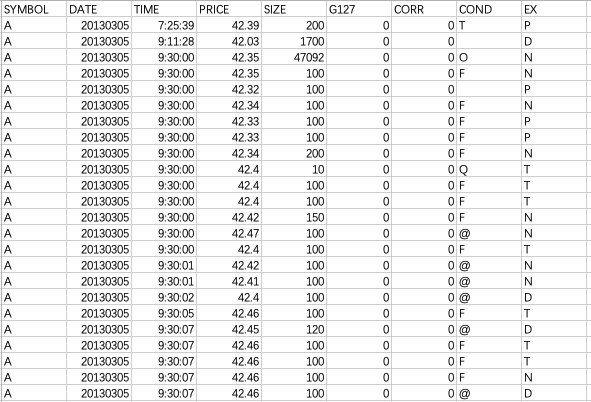

Figure 1: Second-level TAQ Trades Sample Data (TAQ.CT_20130305) -

and TAQ.CQ_20130305 for second-level quote record.

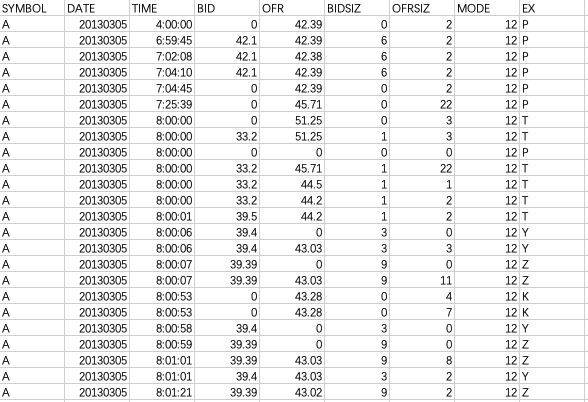

Figure 2: Second-level TAQ Quotes Sample Data (TAQ.CQ_20130305)

-

-

Since 2015, the millisecond-level trade and quotes data has been prevailed. To distinguish the millisecond-level data from the second-level data, WRDS-TAQ database saves the millisecond-level data with format like

-

TAQMSEC.CTM_20150305 for millisecond-level trade record,

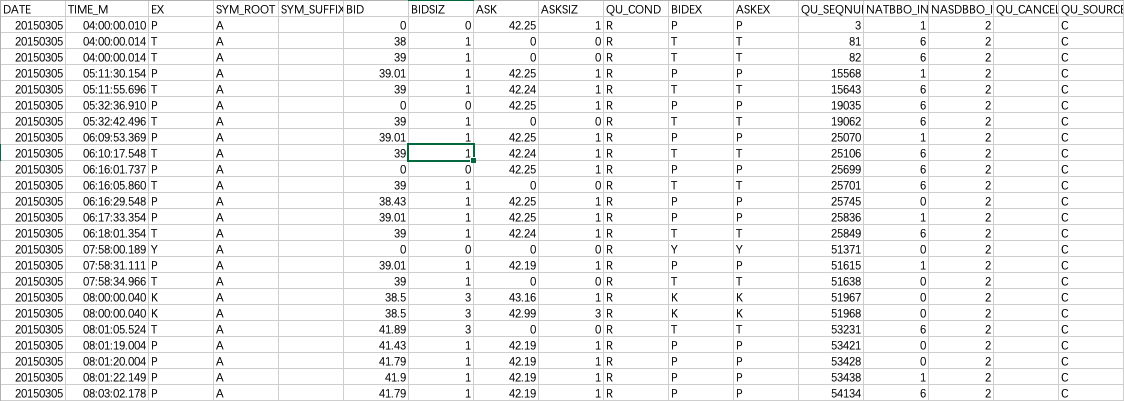

Figure 3: Millisecond-level TAQ Trades Sample Data (TAQMSEC.CTM_20150305) -

TAQMSEC.CQM_20150305 for millisecond-level quote record

Figure 4: Millisecond-level TAQ Quotes Sample Data (TAQMSEC.CQM_20150305)

-

-

Compared with second-level data, the millisecond-level dataset provides more abundent information in at least ways:

- The time precision is improved from second-level (07:25:39) to millisecond-level (07:25:39.105)

- Each order is assigned with an tracking-ID, which lends significant convenience for identifying the subsequent activities (e.g., whether the order is cancelled later) after the order is placed.

IV. Road Map

Step 0. Connect to WRDS server via PC SAS

In this blog post, I connect the WRDS server via the PC SAS because it helps to monitor the output from the server and finetune the codes more efficiently. One can also find how to access the WRDS server via SSH and submit the batched job in my previous blog post Exploit WRDS Cloud via Python.

Step 1. Import the events into SAS work library

First, you need to import your event sample into the SAS work library. Here I use a small sample named as “requestsample.csv”. It contains 999 unique events from 18 distinct days.

Code

Output

|

|

Step 2. Put all the unique dates into a Macro variable

Let’s name the macro variable as datesValsM. This step prepares for the later loop procedure.

|

|

Step 3: Request trade and quotes data from WRDS server

To efficiently implement this procedure, I wrote three macros

- %getdict(yyyymmdd, type);

-

The purpose of this macro is to get the table name

datasetthat should be requested (e.g., TAQ.CQ_20130305) in the WRDS server based on the event dateyyyymmdd(e.g., 20130305) and table typetype(ct or cq). The name of the respect output tableoutnamewill also be determined (e.g., taqout_cq_29130305) -

This is because apart from the exact trading date, there are two extra parameters to decide when picking the exact table that we request data from:

- The library name -

TAQif the trading date is before 2014.12.31, andTAQMSECotherwise - The table name -

CT(inTAQ) orCTM(inTAQMSEC) for trades data;CQ(inTAQ) orCQM(inTAQMSEC) for quotes data

- The library name -

-

To make sure the table name

dataset(which we request data from) and the table nameoutname(which we save output data) can be referenced by other macros, I define those two variables as global macro variables.

|

|

- %getdaily(date, dataset, outname, start, end);

- The idea is to

- select out all the events that happened in a specified date

datefrom the whole sample, - submit query to the table whose name

datasethas been decided by the first macro%getdict(yyyymmdd, type), - require that the trading time should no earlier than the event time minus

startnumber of seconds and no later than the event time plusendnumber of seconds, - Download the query results and save the output data as

outname, which is also determined by the previous macro%getdict(yyyymmdd, type)

- select out all the events that happened in a specified date

|

|

- %iterate(type, start, end);

- With the previous two macros, we have finished the query for a single date. The only left thing is to iterate the query over all the unique days in our sample.

- This step is like a wrapper for our single-date query and thus needs to utilize the previous two queries.

- The three parameters in this macro are the very input parameters for our little high-frequency query project

- Type -

ctfor trades data query,cqfor quotes data query - Start - The

Startnumber of seconds before the event time would be the beginning of the window. For example, I assignStartas 900 because I want the query window to start at 15 minutes before the event time. - End - The

Endnumber of seconds after the event time would be the beginning of the window. For example, I assignEndas 900 because I want the query window to end at 15 minutes after the event time.

- Type -

|

|

V. Output

The output dataset are saved separately for each date, named as the way we have determined with the macro %getdict(yyyymmdd, type).

The dataset looks like as following.

VI. SAS Code

|

|

Main References

Ahmed, A. S., Li, Y., Xu, N. (2020). Tick size and financial reporting quality in small‐cap firms: Evidence from a natural experiment. Journal of Accounting Research, 58(4), 869-914.

Bhattacharya, N., Chakrabarty, B., Wang, X. F. (2020). High-frequency traders and price informativeness during earnings announcements. Review of Accounting Studies, 25(3), 1156-1199.

Breckenfelder, J. (2019). Competition among high-frequency traders, and market quality.

Charles M. C. Lee, Edward M. Watts; Tick Size Tolls: Can a Trading Slowdown Improve Earnings News Discovery?. The Accounting Review 1 May 2021; 96 (3): 373–401.

Chordia, T., Miao, B. (2020). Market efficiency in real time: Evidence from low latency activity around earnings announcements. Journal of Accounting and Economics, 70(2-3), 101335.

Rogers, J. L., Skinner, D. J., Zechman, S. L. (2016). The role of the media in disseminating insider-trading news. Review of Accounting Studies, 21(3), 711-739.

Rogers, J. L., Skinner, D. J., Zechman, S. L. (2017). Run EDGAR run: SEC dissemination in a high‐frequency world. Journal of Accounting Research, 55(2), 459-505.

SEC (2010). Concept release on equity market structure. concept release 34-61358, FileNo, 17 CFR Part 242 RIN 3235–AK47.

https://wrds-www.wharton.upenn.edu/documents/774/Daily_TAQ_Client_Spec_v2.2a.pdf